Bridging Linguistic Divides: How Neuro-Symbolic Rules Generalizes Across Code-Mixed Languages

In our increasingly interconnected world, where digital communication often blurs linguistic boundaries, code-mixing, which is nothing but integration of two or more languages in a single utterance, has become a pervasive phenomenon.

From "Hinglish" in India to "Spanglish" in the US, "Taglish" in the Philippines, and "Arabizi" across the Middle East, these vibrant linguistic hybrids reflect dynamic cultural exchanges.1 Developing AI models capable of understanding and processing these complex language forms is crucial. Our neuro-symbolic framework, initially developed for Hinglish, presents a compelling case for its broader applicability, demonstrating how it can effectively generalize across diverse code-mixed scenarios.

The Power of Adaptability: Neuro-Symbolic Design

The core strength of our approach lies in its neuro-symbolic architecture, a powerful fusion of deep learning's statistical prowess and symbolic AI's rule-based precision.2 This dual nature is what makes it inherently adaptable.

Imagine the framework as having two main engines:

The Rule Engine (Symbolic Component): This part operates on explicit linguistic rules. For Hinglish, it might contain rules about where English words typically get inserted into Hindi sentences, or common grammatical shifts.

The Learning Engine (Neural Component): This is powered by neural networks that learn complex patterns from vast amounts of text data. These networks create "embeddings"—numerical representations of words and phrases that capture their semantic and syntactic relationships.3

The beauty is that both engines are modular and customizable.

Customization for New Languages: Beyond Hinglish

When shifting from Hinglish to, say, Spanglish (Spanish-English code-mixing), the process is remarkably streamlined:

Customizing the Rule Engine:

We don't need to rebuild the entire system. Instead, we customize the symbolic rule component using language-specific lexicons and syntactic patterns. For example, a rule for subject-verb agreement might need slight adjustments based on Spanish grammar, or we'd update the dictionary to include Spanish and English words.

The fundamental concept of identifying language switches or noun-phrase insertions remains, but the specific linguistic items change. For example , Consider the common code-mixing pattern where a noun is switched.

In Hinglish, one might say, "Mujhe naya laptop chahiye" (I want a new laptop). In Spanglish, it could be, "Quiero una pizza" (I want a pizza). While the specific words are different, the underlying syntactic pattern (inserting an English noun into a Spanish/Hindi sentence) is structurally similar.

Symbolic rules can be adjusted to recognize these specific linguistic tokens and their permissible positions.

Retraining the Learning Engine:

The neural layers are then retrained on data from the new code-mixed corpus. If we're working with Spanglish, we'd feed the model millions of Spanglish sentences. This allows the neural network to learn the unique contextual nuances, semantic relationships, and even subtle emotional tones present in that specific code-mixed environment.

The "suitable embeddings" developed during this retraining process are crucial, as they provide the rich, contextualized numerical representations of words and phrases unique to Spanglish. For example, in English, "cool" can mean temperature or trendy. In Hinglish, "cool" might be used similarly. In Spanglish, "chévere" (Spanish for cool/awesome) might have a similar embedding space to "cool" in English, allowing the neural network to understand their functional equivalence in code-mixed contexts, even if one word is in English and the other in Spanish.

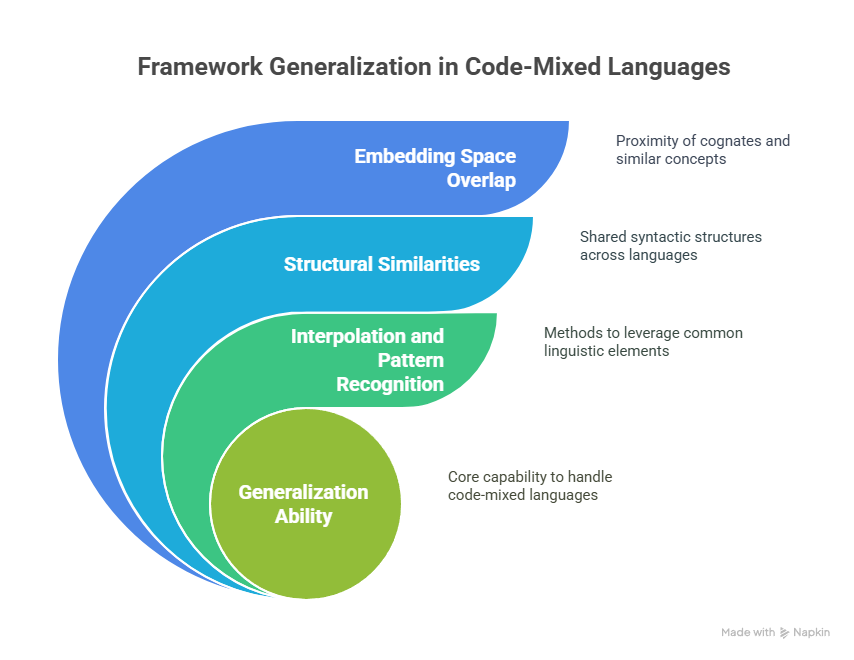

Generalization Through Shared Structure and Interpolation

Our framework's ability to generalize effectively to a wide range of informal, hybrid language scenarios isn't about conjuring entirely new linguistic knowledge. Instead, it's a sophisticated form of interpolation and pattern recognition, leveraging the common elements between languages and the statistical regularities learned from data.

Structural Similarities: Many code-mixed languages, particularly those involving English, share similar syntactic structures (e.g., Subject-Verb-Object word order). If a model learns to identify language switch points in Hinglish at a verb boundary, it can often apply a similar logic to Taglish, where the same structural patterns might emerge.

The "minimal rule modifications" are possible precisely because these underlying structural parallels exist.

Embedding Space Overlap:

When languages share cognates (words with common origins) or semantically similar concepts, their embeddings can reside in close proximity within the high-dimensional space learned by the neural network. This allows the model to infer relationships even when words are from different languages.

For instance, the embedding for "libro" (Spanish for book) might be closer to "book" than to "house," allowing the neural layers to understand the semantic flow across a Spanglish sentence like "I want to read a libro."

This nuanced generalization makes our approach particularly well-suited for the dynamic and informal nature of social media communication, where fluid code-mixing is the norm.

It allows the model to effectively process, analyze, and even generate content in these hybrid linguistic landscapes, bridging communication gaps and enhancing our understanding of global online interactions.