First, let's understand: ‘What is a Compositional System?’

Our life is made up of atomic knowledge.

Our life is made up of little units of knowledge.

Using these units, which become blocks of knowledge, we build our life.

We build new things, new ideas, and new structures in our life.

If AI is to be good at reasoning, thinking, and making things like this,

it needs to have a good ‘Compositional Structure’.

We search, we explore, we find solutions, and so do algorithms!

We find suitable solutions and appropriate options, and so do algorithms!

We try to optimize and learn to find the best alternatives, and so do algorithms!

This is akin to Compositional Search.

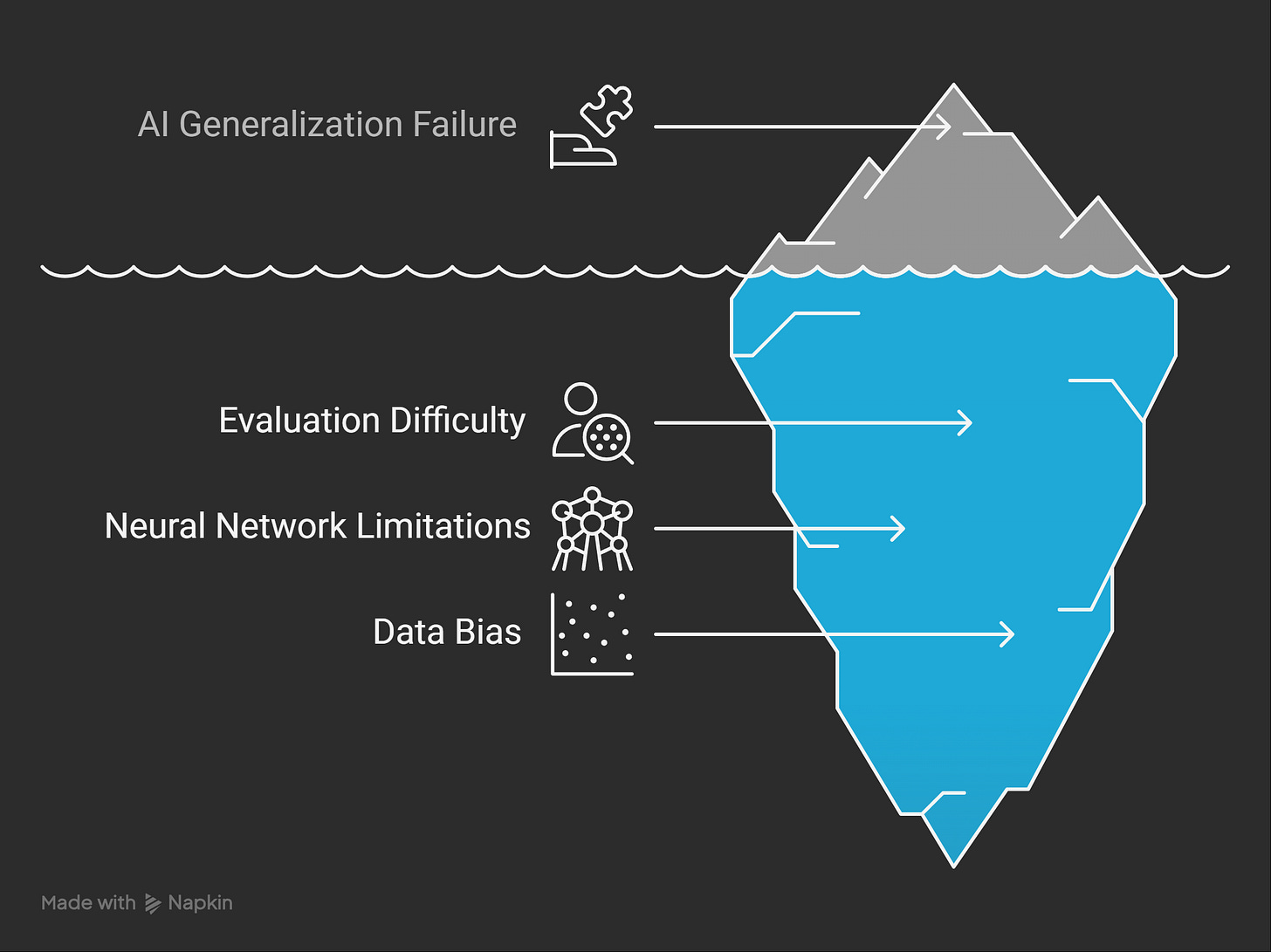

The field of AI is actively grappling with several key challenges related to compositional search and learning. These challenges hinder the development of AI systems that can understand, reason, and generalize the way humans do. Here are some of the main ones:

1. Systematic Generalization (Novel Composition):

The Core Problem: AI models often struggle to generalize to novel combinations of familiar components or concepts that were not explicitly seen during training. While they might learn to recognize "red" objects and "wooden" objects, they may fail to understand or correctly process a "red wooden block" if this specific combination wasn't in their training data.

Evaluation Difficulty: Creating benchmarks that reliably measure systematic generalization is challenging. It's difficult to ensure that models aren't just relying on superficial statistical correlations rather than true compositional understanding.

Neural Network Limitations: Standard neural network architectures sometimes struggle to disentangle and recombine features in a systematic way. They might learn holistic representations that don't easily break down into meaningful parts.
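To make the "red wooden block" case concrete, here is a minimal sketch of how a held-out-combination split could be built. The function names (`compositional_split`, `covers_all_primitives`) and the attribute lists are made up for illustration; real benchmarks are far richer than this.

```python
from itertools import product


def compositional_split(colors, materials, held_out):
    """Split all (color, material) pairs into train and test sets.

    Pairs listed in `held_out` go to the test set; everything else is train.
    """
    all_pairs = set(product(colors, materials))
    test = set(held_out) & all_pairs
    train = all_pairs - test
    return train, test


def covers_all_primitives(train, colors, materials):
    """Check that every primitive still appears in training.

    If this holds, only the *combination* is novel at test time,
    not the individual concepts.
    """
    seen_colors = {color for color, _ in train}
    seen_materials = {material for _, material in train}
    return seen_colors == set(colors) and seen_materials == set(materials)


# Illustrative attribute lists (assumptions, not from any real dataset).
colors = ["red", "blue", "green"]
materials = ["wooden", "metal", "plastic"]

train, test = compositional_split(colors, materials, held_out=[("red", "wooden")])
print(len(train), "training pairs;", "held out:", sorted(test))
print("all primitives seen in training:", covers_all_primitives(train, colors, materials))
```

A model that only sees `train` has met "red" and "wooden" separately, so failing on the held-out pair points at the composition step rather than at missing vocabulary.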

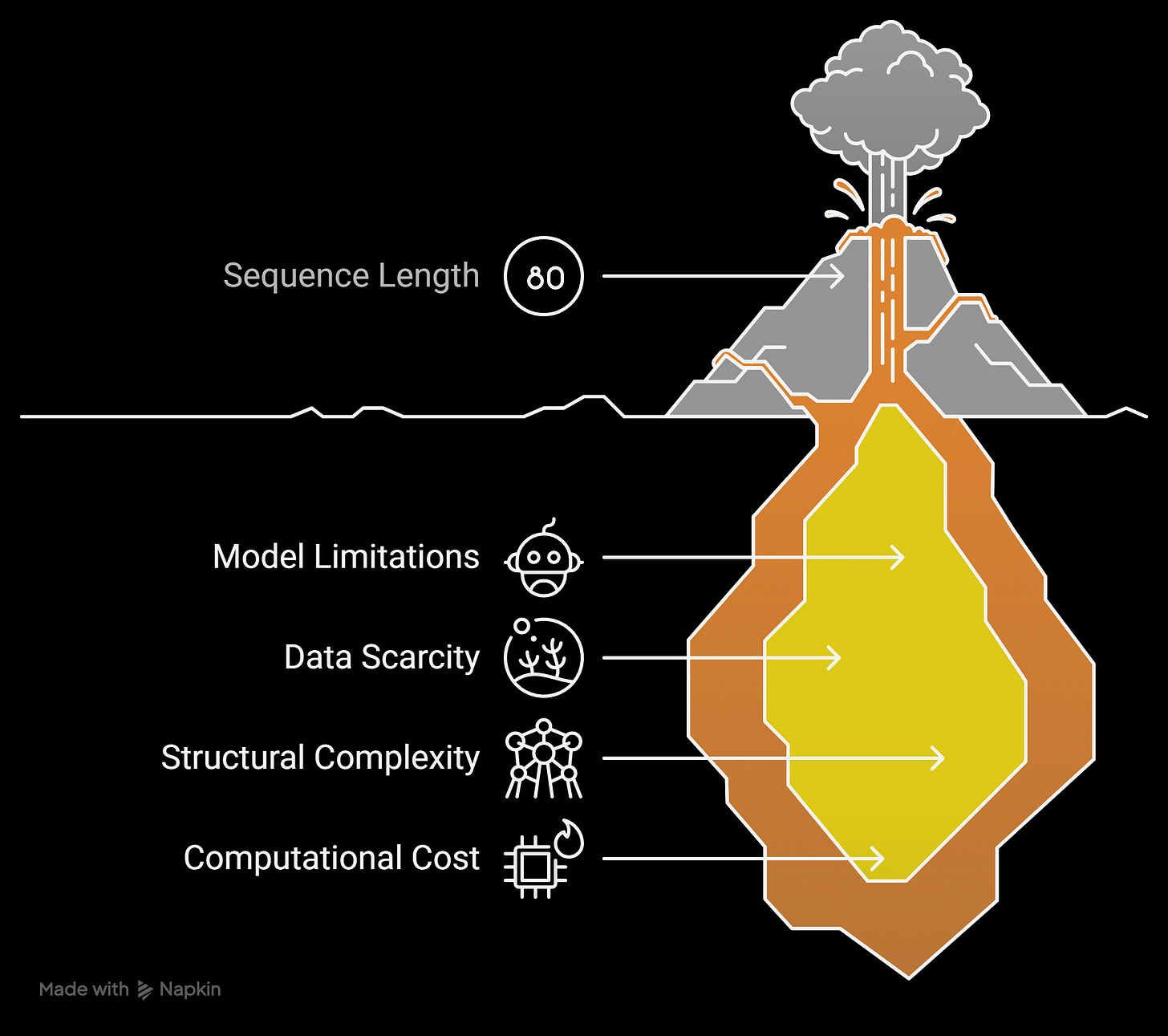

2. Productivity or Length Generalization:

Handling Longer Sequences: Models trained on short sequences often perform poorly on significantly longer ones, even if the longer sequences are built from the same basic elements and rules. For example, a language model trained on short sentences might fail to understand or generate much longer, grammatically correct sentences with nested structures.

Recursive Structures: Dealing with recursive or nested compositional structures poses a significant challenge. Understanding how a component can be embedded within another instance of itself (like "the cat in the box on the table") requires a level of structural understanding that many current models lack.
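A length-generalization test can be sketched by generating nested phrases recursively and splitting them by depth. This is only a toy under stated assumptions: the word lists and the names `nested_phrase` and `length_split` are hypothetical.

```python
# Illustrative vocabulary (an assumption for this sketch).
NOUNS = ["cat", "box", "table", "room"]
PREPS = ["in", "on"]


def nested_phrase(depth):
    """Build a noun phrase with `depth` nested prepositional phrases."""

    def build(level):
        noun = NOUNS[level % len(NOUNS)]
        if level == depth:
            return f"the {noun}"
        prep = PREPS[level % len(PREPS)]
        # Recursive step: a noun phrase embeds another noun phrase.
        return f"the {noun} {prep} {build(level + 1)}"

    return build(0)


def length_split(max_train_depth, max_test_depth):
    """Train on shallow phrases, test only on strictly deeper ones."""
    train = [nested_phrase(d) for d in range(max_train_depth + 1)]
    test = [nested_phrase(d) for d in range(max_train_depth + 1, max_test_depth + 1)]
    return train, test


train, test = length_split(max_train_depth=1, max_test_depth=3)
print(train)
print(test)
```

Every test phrase uses the same words and the same rule as the training phrases; only the nesting depth is new.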

3. Substitutivity (Synonymity):

Understanding Semantic Equivalence: Models can struggle when familiar components are replaced by synonyms or semantically equivalent terms within a novel composition. For instance, if a model learns "a big dog," it might not automatically understand "a large canine" if it hasn't seen this exact phrasing in training.

Context Dependence: The meaning of a component can change based on the context in which it's used. AI systems need to understand how the surrounding elements influence the meaning of individual parts within a composition.
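One rough way to probe substitutivity is to check whether a model's output stays the same after swapping in synonyms. The sketch below uses a deliberately naive lookup "model" that memorizes exact phrases; `lookup_model`, the synonym table, and the labels are all invented for illustration.

```python
def substitute(phrase, synonyms):
    """Replace each word that has a listed synonym; leave other words as-is."""
    return " ".join(synonyms.get(word, word) for word in phrase.split())


def substitutivity_score(model, phrases, synonyms):
    """Fraction of phrases whose prediction survives synonym substitution."""
    consistent = sum(
        model(phrase) == model(substitute(phrase, synonyms)) for phrase in phrases
    )
    return consistent / len(phrases)


# Toy "model" that only knows the exact phrases it memorized (hypothetical).
memorized = {"a big dog": "animal", "a big house": "building"}


def lookup_model(phrase):
    return memorized.get(phrase, "unknown")


synonyms = {"big": "large", "dog": "canine"}
score = substitutivity_score(lookup_model, list(memorized), synonyms)
print(substitute("a big dog", synonyms), "->", lookup_model("a large canine"))
print("substitutivity score:", score)
```

The memorizing model scores zero here because it has no notion that "big" and "large" are interchangeable, which is the failure mode described above in its most extreme form.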

4. Localism vs. Globalism in Composition:

Contextual Influence: The principle of compositionality suggests that the meaning of a whole is a function of the meanings of its parts and how they are combined. However, in reality, the global context can significantly influence the meaning of local components. AI needs to learn when to rely on local composition and when to consider the broader context.

Non-Local Dependencies: Some compositions involve dependencies between elements that are not immediately adjacent, making it harder for models with limited receptive fields to capture these relationships.

5. Overgeneralization:

Rules vs. Exceptions: Models can overgeneralize learned rules to cases where exceptions apply. For example, a language model might learn the regular past tense rule (add "-ed") so well that it incorrectly applies it to irregular verbs (e.g., "goed" instead of "went").
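The "goed" versus "went" case can be sketched as a rule plus an exception table. This is a simplified illustration, not a real morphology system; the tiny `IRREGULAR` table and both function names are assumptions.

```python
# Small exception table (illustrative, far from complete).
IRREGULAR = {"go": "went", "eat": "ate", "run": "ran"}


def regular_past(verb):
    """Apply only the regular rule, ignoring exceptions."""
    if verb.endswith("e"):
        return verb + "d"
    return verb + "ed"


def past_tense(verb):
    """Check the exception table first, then fall back to the regular rule."""
    if verb in IRREGULAR:
        return IRREGULAR[verb]
    return regular_past(verb)


for verb in ["walk", "bake", "go"]:
    print(verb, "| rule only:", regular_past(verb), "| with exceptions:", past_tense(verb))
```

A learner that has absorbed only `regular_past` overgeneralizes; the hard part for a learned model is acquiring the exceptions without losing the rule.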

Idiomatic Expressions: Understanding idioms, where the meaning of the whole is not a simple composition of the meanings of the parts ("kick the bucket"), remains a challenge.

6. The Need for Better Benchmarks and Evaluation Metrics:

Faithful Evaluation: Current benchmarks might not always faithfully assess true compositional understanding, and models might achieve good performance by exploiting dataset biases or learning superficial patterns.

Comprehensive Metrics: There's a need for more comprehensive metrics that go beyond simple accuracy and can evaluate different facets of compositionality like systematicity, productivity, and substitutivity.
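As a small illustration of going beyond a single accuracy number, results can be broken down by facet. The function name `accuracy_by_facet` and the sample results are made up for this sketch and do not come from any real evaluation.

```python
from collections import defaultdict


def accuracy_by_facet(results):
    """Compute accuracy separately for each facet.

    `results` is an iterable of (facet_name, is_correct) pairs.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for facet, is_correct in results:
        totals[facet] += 1
        correct[facet] += int(is_correct)
    return {facet: correct[facet] / totals[facet] for facet in totals}


# Fabricated toy outcomes, purely to show the shape of the report.
results = [
    ("systematicity", True),
    ("systematicity", False),
    ("productivity", False),
    ("substitutivity", True),
]
report = accuracy_by_facet(results)
print(report)
```

Overall accuracy on these four toy items would be 50%, which hides the fact that the facets behave very differently.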

7. Lack of Systematic Theoretical and Experimental Methodologies:

Understanding Underlying Mechanisms: There's a lack of a deep theoretical understanding of how and why certain models exhibit (or fail to exhibit) compositional abilities. This makes it harder to design models with strong compositional generalization.

Guiding Research: Without robust theoretical frameworks, it's challenging to develop systematic experimental methodologies to analyze and improve compositional learning in AI models.

In a nutshell, this domain is concerned with the following problems:

How to effectively decompose complex problems.

How to efficiently explore the vast space of possible combinations.

How to ensure that the composed solutions are meaningful and coherent.

How to enable AI to learn and generalize compositional abilities to new, unseen problems.
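The questions above can be made concrete with a tiny compositional search: given a few input/output examples, try sequences of primitive operations, shortest first, until one fits. This is a brute-force sketch under stated assumptions; the primitives and the name `compositional_search` are hypothetical, and practical systems need much smarter pruning.

```python
from itertools import product

# Illustrative primitive operations (assumptions for this sketch).
PRIMITIVES = {
    "inc": lambda x: x + 1,
    "double": lambda x: x * 2,
    "square": lambda x: x * x,
}


def run(program, x):
    """Apply each named primitive in order to the input value."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x


def compositional_search(examples, max_length=3):
    """Return the shortest primitive sequence matching all (input, output) examples.

    The number of candidates grows as len(PRIMITIVES) ** length, which is
    why exploring the space of combinations efficiently is hard.
    """
    for length in range(1, max_length + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None


examples = [(1, 4), (2, 6), (3, 8)]
solution = compositional_search(examples)
print(solution)
```

For these examples the search finds "increment, then double". With only three primitives there are 3 + 9 + 27 candidates up to length 3; with realistic primitive sets the space grows too quickly for plain enumeration.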

Addressing these challenges requires innovative approaches in model architecture, learning algorithms, data representation, and evaluation strategies. Research in areas like neuro-symbolic AI, meta-learning, and more structured and interpretable models is aimed at tackling these fundamental hurdles on the way to truly compositional intelligence in AI systems.