Recently, I have seen a lot of articles and video clips claiming that Yann LeCun is done with LLMs.

He does not see this technology stack as the way to build a new breed of intelligent technology.

It seems that the core philosophy behind Yann LeCun's recommendations is a shift away from models that primarily learn by predicting surface-level patterns in massive datasets (like text) towards models that learn representations of the world, can predict future outcomes, and perform planning.

Here's a detailed look at each recommendation and the likely reasoning:

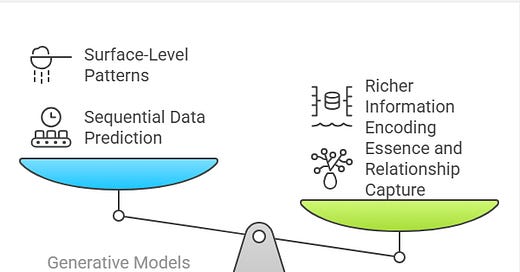

Yann LeCun : Abandon generative models in favour of joint-embedding architectures

What it implies: Stop focusing on models that are trained primarily to generate data, typically token by token (like auto-regressive LLMs predicting the next word), and instead focus on architectures that learn to map different pieces of data (or different aspects of the same data) into a shared, meaningful vector space (an "embedding"). Joint-embedding predictive architectures (JEPAs) are a key example from LeCun's work, where the model predicts the embedding of a masked-out part of the data based on the embedding of the available parts. This is about learning representations that capture essence and relationship rather than just surface-level sequential patterns. A good embedding space allows you to compare different types of data, find analogies, understand concepts, and see how different pieces of information relate to each other, regardless of their original form or sequence. It's a denser, potentially richer way of encoding information than just predicting the next item in a sequence.

What do I think?

Yes, to me this also seems to be the right direction. I have been working on language models for low-resource languages such as Punjabi and Bhojpuri, and through experimentation and reflection on what I'm doing, I have always felt that current LLMs cannot capture the nuances of local dialects. LLMs do absorb some cultural nuance, but information is lost along the way, and I feel that loss becomes permanent as LLMs are trained and retrained with reinforcement feedback loops. Nuance in language isn't just about which word comes next, but about the subtle flavor, connotation, idiom, or cultural reference associated with words and phrases in a specific context or dialect.

These subtleties might be diluted or averaged out when a model is trained on a vast, diverse corpus primarily through a token-prediction objective. The discrete nature of tokens might be insufficient to fully encode the continuous spectrum of meaning and connotation found in nuance.

Technically, the crucial difference here is the prediction target. Instead of predicting the raw pixels or raw tokens of the masked-out part, the model predicts its embedding. This forces the model to:

Learn a good encoder that creates meaningful embeddings.

Learn how to predict in the abstract, semantic space of embeddings, rather than the concrete space of raw data.

Potentially learn richer relationships between different parts of the data than simply what comes next sequentially.
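To make the "predict the embedding, not the raw data" idea concrete, here is a minimal sketch. It is my own toy illustration, not LeCun's implementation: the encoders and predictor are random linear maps, and the names (`context_encoder`, `target_encoder`, `predictor`) and sizes are assumptions chosen for readability. Real JEPAs use deep networks and extra mechanisms to keep the embeddings from collapsing, none of which is shown here.

```python
import random

def linear(weights, vector):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
INPUT_DIM, EMBED_DIM = 6, 3  # hypothetical sizes, for illustration only

# Untrained, randomly initialised linear maps standing in for real networks.
context_encoder = random_matrix(EMBED_DIM, INPUT_DIM, rng)
target_encoder = random_matrix(EMBED_DIM, INPUT_DIM, rng)
predictor = random_matrix(EMBED_DIM, EMBED_DIM, rng)

def jepa_style_loss(visible_part, masked_part):
    """Distance between the predicted and the actual embedding of the masked part."""
    context_embedding = linear(context_encoder, visible_part)
    predicted_embedding = linear(predictor, context_embedding)
    # The target is an embedding of the masked part, never its raw values.
    target_embedding = linear(target_encoder, masked_part)
    return squared_distance(predicted_embedding, target_embedding)

visible = [rng.uniform(-1.0, 1.0) for _ in range(INPUT_DIM)]
masked = [rng.uniform(-1.0, 1.0) for _ in range(INPUT_DIM)]
loss = jepa_style_loss(visible, masked)
print(f"embedding-space loss: {loss:.4f}")
```

The point of the sketch is only where the loss lives: it compares two vectors of size `EMBED_DIM`, so the model is never asked to reproduce every raw detail of the masked part.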

Plausible Reasons as to Why:

Efficiency: Generating data token by token can be computationally expensive and slow, especially for long sequences or high-dimensional data (like images). Learning representations in an embedding space and making predictions within that space can be much more efficient.

Learning Deeper Meaning: Generative models, especially simple auto-regressive ones, can sometimes just learn superficial correlations. Joint-embedding models, particularly predictive ones, are hypothesized to learn richer, more abstract, and hierarchical representations of the underlying data structure and relationships, focusing on what is being represented rather than just how to generate the next piece. They learn compatibility.

Avoiding Hallucinations: Auto-regressive generation can compound errors. If a model makes a slightly wrong prediction early in a sequence, subsequent predictions are based on that error, leading to drift and hallucination. Predictive joint-embedding models might be less prone to this because they predict representations based on a global context, not just the immediately preceding tokens.
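The compounding-error argument can be put into a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that every generated token has the same independent chance of being wrong; real models do not behave this neatly, and the 1% error rate is a made-up number.

```python
def prob_sequence_stays_correct(per_token_error, length):
    """Chance that no token in the sequence is wrong, assuming independent errors."""
    return (1.0 - per_token_error) ** length

# Hypothetical 1% per-token error rate.
for n in (10, 100, 1000):
    p = prob_sequence_stays_correct(0.01, n)
    print(f"length {n:4d}: P(no error so far) = {p:.3f}")
```

Under these assumptions the chance of staying on track shrinks exponentially with sequence length, which is the intuition behind the drift described above.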

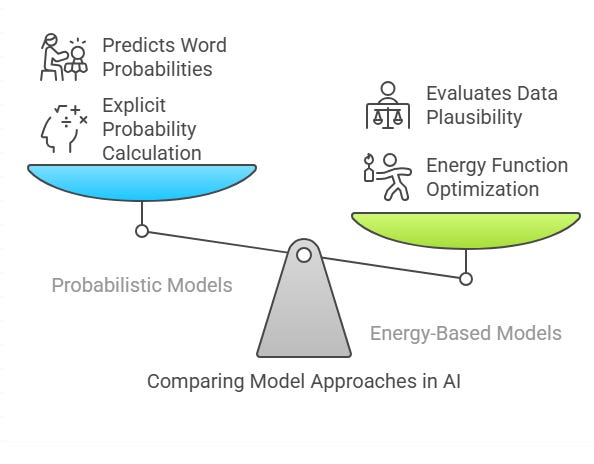

Yann LeCun : Abandon probabilistic models in favour of energy-based models

What it implies: Move away from models that explicitly calculate and optimize probability distributions over outputs (like predicting the probability of each word in the vocabulary at every step). Instead, use models that define an "energy function," where low energy corresponds to plausible or correct data configurations, and high energy corresponds to implausible or incorrect ones. Learning involves shaping the energy function so that correct configurations receive low energy and incorrect ones receive higher energy.

Plausible Reasons as to Why:

Flexibility and Efficiency in High Dimensions: Explicitly normalizing probability distributions (needed in some probabilistic models) can be intractable in high-dimensional spaces (like the space of all possible images or long text sequences). Energy-based models avoid this by learning an unnormalized scalar score (the energy) rather than a normalized probability distribution.

Learning Compatibility: Energy-based models are well-suited for learning criteria or constraints – learning why certain data configurations are valid or compatible, which is seen as crucial for understanding the world and predicting how things fit together. Probabilistic models focus on likelihood, which is related but doesn't directly capture underlying constraints in the same way. LeCun argues that the world doesn't provide probabilities; it provides outcomes with certain compatibilities or "energies."
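A tiny sketch may help show the difference between scoring with an energy and computing a probability. The energy function below is hand-written and hypothetical (it simply says `y` is compatible with `x` when `y` is close to `2 * x`); in a real energy-based model it would be a trained network.

```python
import math

def energy(x, y):
    """Hypothetical energy: low when y is compatible with x (here, y close to 2*x)."""
    return (y - 2.0 * x) ** 2

def infer(x, candidates):
    """Inference picks the lowest-energy candidate; no normalization is needed."""
    return min(candidates, key=lambda y: energy(x, y))

def gibbs_probabilities(x, candidates, temperature=1.0):
    """Turning energies into probabilities requires a sum over every candidate."""
    weights = [math.exp(-energy(x, y) / temperature) for y in candidates]
    partition = sum(weights)  # the normalizer that becomes intractable at scale
    return [w / partition for w in weights]

candidates = [0.0, 1.0, 2.0, 3.0, 4.0]
best = infer(1.5, candidates)
probs = gibbs_probabilities(1.5, candidates)
print("lowest-energy candidate:", best)
```

With five candidates the normalizing sum is trivial. The argument above is about spaces such as all possible images, where that sum cannot be enumerated but comparing energies still works.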

What do I think?

Recently I was building a Gurmukhi [impure] to Gurmukhi [pure] translation framework. Because I did not want to reinvent the wheel, I combined two existing embeddings that have similar dimensions but represent the same text with different vectors, and then updated the joint embeddings with fresh Gurmukhi data.

In a nutshell, here's why my approach aligns well with the concepts recommended by Yann LeCun.

Focus on Representations: My strategy centers entirely around creating a better representation of the Gurmukhi text by working with embeddings. This directly resonates with the idea of shifting focus from merely predicting tokens to building rich, meaningful vector spaces.

Leveraging Existing Knowledge: I'm choosing not to "reinvent the wheel" by using pre-trained embeddings. These embeddings already encode general linguistic knowledge learned from potentially large corpora (even if not perfectly suited to my specific task).

Combining Different Perspectives: The fact that the two embeddings have "different vector representations on the same text" is a key strength. Different pre-training processes or architectures often capture different facets of language (e.g., one might be better at syntax, another at semantics, another at character-level patterns). By combining them, I'm potentially creating a more comprehensive and robust initial representation that captures a wider range of linguistic features relevant to Gurmukhi. This combined embedding space could capture nuances that neither single embedding space holds alone.

Task-Specific Adaptation: Updating the joint embeddings with "new (fresh Gurmukhi data)" specifically related to the "impure" vs. "pure" distinction is crucial. Pre-trained embeddings are general; they need to be adapted to the specific patterns and nuances of the target task. By fine-tuning on my own relevant data, I am shaping this combined embedding space to become more discriminative and informative for the purpose of distinguishing and transforming "impure" into "pure" Gurmukhi. This process allows the embeddings to better capture the subtle differences, common errors, or dialectal variations present in the "impure" data and their corresponding "pure" forms.

Implicitly Addressing Nuance: The task ("impure" to "pure" translation) is inherently about handling linguistic nuance, variation, and potentially errors. By creating specialized embeddings tailored to this specific task through combining and fine-tuning, I am equipping my downstream model (whatever architecture these embeddings are fed into) with a richer, more contextually relevant input representation that is better equipped to understand and process these nuances than generic embeddings might be.

While I might still use a model architecture (like a sequence-to-sequence model) that performs token prediction at the output stage, my focus on improving the input representation through this combined and fine-tuned embedding strategy is aligned with the philosophy of emphasizing learned representations as a powerful way to handle complex linguistic tasks. That emphasis matters most where capturing nuance and domain-specific patterns is critical, as is often the case with low-resource languages and dialects. The approach is a practical way to leverage existing resources while specializing them for the unique demands of my Gurmukhi translation task.
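For readers who want a feel for what "combining two embeddings" can look like, here is one simple way to do it: normalize each vector and concatenate the two. This is an assumption made for illustration, since concatenation is only one of several options (averaging or a learned projection are others). The token keys are romanized placeholders and the vector values are made up. The fine-tuning step on fresh data is not shown.

```python
def l2_normalize(vector):
    """Scale a vector to unit length so neither source dominates the other."""
    norm = sum(v * v for v in vector) ** 0.5
    return [v / norm for v in vector] if norm > 0 else list(vector)

def combine_embeddings(table_a, table_b):
    """Concatenate normalized vectors for the tokens present in both tables."""
    shared_tokens = table_a.keys() & table_b.keys()
    return {
        token: l2_normalize(table_a[token]) + l2_normalize(table_b[token])
        for token in shared_tokens
    }

# Toy tables with invented values; real ones would come from pre-trained models.
embedding_a = {"paani": [0.2, 0.9, -0.1], "ghar": [0.7, -0.3, 0.4]}
embedding_b = {
    "paani": [-0.5, 0.1, 0.8],
    "ghar": [0.0, 0.6, -0.6],
    "roti": [0.3, 0.3, 0.3],  # missing from embedding_a, so it is dropped
}

joint = combine_embeddings(embedding_a, embedding_b)
for token, vector in sorted(joint.items()):
    print(token, len(vector))
```

Each joint vector keeps both views of the token side by side, so a downstream model (or a later fine-tuning pass) can decide how much weight to give each source.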

Yann LeCun : Abandon contrastive methods in favour of regularised methods

What it implies: Stop relying heavily on training methods that work by pushing positive examples closer and negative examples further apart in embedding space (contrastive learning). Instead, use methods that employ regularization techniques, often involving predicting masked data or enforcing certain properties on the learned representations without needing to explicitly sample negative examples for every step. Predictive coding methods (like JEPA) fall under this, predicting masked representations.

What do I think? Yes, I agree. Contrastive methods train models by defining "positive pairs" (examples that should be considered similar, e.g., two different augmented versions of the same image, or a piece of text and a related image) and "negative pairs" (examples that should be considered dissimilar, e.g., an image and a random unrelated piece of text). The training objective is typically a contrastive loss function that pulls the representations (embeddings) of positive pairs closer together in the embedding space and pushes the representations of negative pairs further apart. Think of SimCLR, CLIP, or earlier Siamese networks.

Contrastive learning has been very successful in self-supervised learning for learning discriminative representations. It teaches the model to distinguish between things. However, its core mechanism relies on having a batch of data and explicitly calculating the similarity of a positive pair relative to a potentially large number of negative pairs within that batch. This requires careful and often computationally expensive sampling of negative examples, and the performance can be highly sensitive to the choice and number of negatives. The model learns by knowing what isn't the same or related, as much as what is.
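The mechanics described above can be sketched with an InfoNCE-style loss, which is the general form of objective used by methods in this family. The vectors and the temperature value below are made up for illustration, and this is a single-anchor toy rather than the batched implementation a real library would use.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy of picking the positive out of the positive plus all negatives."""
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, negative) / temperature for negative in negatives]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(
        sum(math.exp(logit - max_logit) for logit in logits)
    )
    return log_sum_exp - logits[0]

anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]

loss_with_good_positive = info_nce_loss(anchor, [0.9, 0.1], negatives)
loss_with_bad_positive = info_nce_loss(anchor, [0.0, 1.0], negatives)
print(loss_with_good_positive < loss_with_bad_positive)
```

Note that every negative adds a term to the sum, which is where the cost and the sensitivity to the choice of negatives come from.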

LeCun advocates for methods that learn representations by performing a predictive task on the data itself, often involving masking out parts and predicting the masked information (or its representation) based on the unmasked parts. The "regularization" aspect comes from the nature of the prediction task itself, forcing the model to learn meaningful, structured representations. JEPAs are a prime example: predict the embedding of a masked part based on the embedding of the unmasked part.

The key difference here is the training signal. Instead of comparing distances between pairs (positive vs. negative), the model is trained to accurately predict missing information or its representation. This objective forces the model to build an internal model of the data's underlying structure, dependencies, and context. It's not just learning to distinguish A from B and C; it's learning what A is composed of and how its parts relate, or what part B is likely to be given part A. This often avoids the need for explicit, hard negative sampling.

Some things cannot be just positive or negative; they are what they are, and they need to be represented "as they are". My intuition aligns with the motivation behind favoring predictive, regularized methods like JEPAs over purely contrastive ones.

The Limitation of Binary: In reality, complex data like language isn't always best simplified into just "positive" (this is similar) or "negative" (this is dissimilar) pairs. Concepts have nuanced relationships, multiple attributes, internal structure, and exist within a complex context. Trying to learn representations solely by pushing and pulling based on pairwise similarity/dissimilarity might inherently lose some of this richness.

Representing "As They Are": Predictive methods, by contrast, aim to capture the internal coherence and structure of the data.

When a JEPA predicts the embedding of a masked-out section of text based on the surrounding text embedding, it has to learn the semantic flow, grammatical structure, topic consistency, and perhaps even stylistic elements that dictate what kind of meaning belongs in that masked spot given the context.

It's learning to represent the data (x) in a way (via embeddings) that facilitates reconstructing or predicting its inherent properties and internal relationships, rather than just its position relative to other, potentially arbitrary, negative examples.

The model isn't just learning that "cat" is different from "dog" (though a good representation would capture that). It's learning that given the phrase "The cat sat on the...", the masked word's representation should encode something plausible that fits grammatically and semantically, like "mat" or "rug." This requires understanding the internal logic of the sequence and the concepts involved – understanding the data "as it is" in context.

LeCun's argument is that training models to deeply understand data requires objectives that go beyond simple pairwise comparisons (contrastive) and instead force the model to build an internal model of the data's structure and dependencies by predicting its own masked-out parts or enforcing other structural regularities (predictive/regularized). This approach aligns with my feeling that capturing the true nature of data ("as they are") requires a representation that reflects its inherent complexity and internal coherence. Predictive tasks are better suited to foster that than purely discriminative, contrastive ones that rely on binary positive/negative relationships.

Plausible Reasons as to Why:

Cost of Negative Sampling: Contrastive methods often require careful and potentially massive sampling of "negative" examples (data that should be far apart). This can be computationally expensive and tricky to get right, especially as the complexity of the data increases.

Learning Comprehensive Representations: Regularized methods, particularly those based on prediction (like predicting masked parts of data), are argued to be more efficient at learning comprehensive, hierarchical representations of the data without needing to explicitly compare every possible pairing. They learn by understanding the internal structure and predictability of the data itself.
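To show what "enforcing certain properties on the learned representations" can mean without any negatives, here is a sketch of a variance penalty in the style of VICReg. VICReg is my choice of example, since the text above does not name a specific regularizer, and this is only one of its terms. The batches are invented toy data.

```python
def variance_penalty(embeddings, target_std=1.0, eps=1e-4):
    """Hinge penalty on each dimension whose spread across the batch is below target_std."""
    batch_size = len(embeddings)
    dims = len(embeddings[0])
    penalty = 0.0
    for d in range(dims):
        column = [embedding[d] for embedding in embeddings]
        mean = sum(column) / batch_size
        variance = sum((v - mean) ** 2 for v in column) / (batch_size - 1)
        std = (variance + eps) ** 0.5
        penalty += max(0.0, target_std - std)
    return penalty / dims

# A collapsed batch (every embedding identical) versus a well-spread one.
collapsed = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
spread = [[-1.5, 1.0], [0.0, -1.2], [1.5, 0.2]]

print("collapsed:", variance_penalty(collapsed))
print("spread:   ", variance_penalty(spread))
```

The collapsed batch is penalized and the spread-out batch is not, so the model is discouraged from mapping everything to the same point without ever being shown an explicit negative pair.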

Yann LeCun : Abandon Reinforcement Learning in favour of model-predictive control

What it implies: Shift the focus from training agents primarily through trial and error using rewards and penalties (Reinforcement Learning - RL) to using control strategies that rely on an explicit internal "model" of the environment to predict the outcome of actions and then choose actions based on these predictions (Model-Predictive Control - MPC).

Plausible Reasons as to Why:

Planning and Reasoning: True intelligence requires the ability to plan and reason about hypothetical future states. This is inherently difficult for model-free RL, which learns direct stimulus-response mappings or value functions without understanding the underlying dynamics. MPC uses a learned model to simulate possibilities, which is essential for planning.

Sample Efficiency: Model-free RL is notoriously sample-inefficient; it often requires vast amounts of interaction with the environment to learn a good policy. Model-based approaches, by simulating internally, can potentially explore possibilities much more efficiently.

Understanding Cause and Effect: Building and using a world model helps the agent learn cause-and-effect relationships, which is fundamental to understanding the world, something current RL agents often lack.
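Here is a minimal sketch of the model-predictive control loop described above: simulate candidate action sequences with a model, execute only the first action of the best one, then replan. Everything in it is a toy assumption: a one-dimensional world, three actions, a hand-written `world_model`, and an environment that happens to match the model exactly.

```python
from itertools import product

ACTIONS = (-1, 0, 1)

def world_model(state, action):
    """Hypothetical known dynamics: the action shifts the position."""
    return state + action

def cost(state, goal):
    return abs(goal - state)

def plan_first_action(state, goal, horizon=3):
    """Simulate every action sequence with the model; return the best first action."""
    best_sequence, best_cost = None, float("inf")
    for sequence in product(ACTIONS, repeat=horizon):
        simulated, total = state, 0
        for action in sequence:
            simulated = world_model(simulated, action)
            total += cost(simulated, goal)
        if total < best_cost:
            best_sequence, best_cost = sequence, total
    return best_sequence[0]

def run_mpc(start, goal, steps=6):
    state, trajectory = start, [start]
    for _ in range(steps):
        action = plan_first_action(state, goal)  # plan over the horizon
        # Execute only the first action. The real environment is assumed
        # to behave exactly like the model in this toy example.
        state = world_model(state, action)
        trajectory.append(state)  # the next iteration replans from here
    return trajectory

trajectory = run_mpc(start=0, goal=4)
print(trajectory)
```

No reward-driven trial and error happens here: the agent reaches the goal by imagining outcomes with its model. Exhaustive enumeration only works for tiny action spaces; practical planners sample or optimize instead.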

Yann LeCun : Use RL only when planning doesn’t yield the predicted outcome, to adjust the world model or the critic

What it implies: This refines the previous point. RL isn't completely useless, but its role should be specific and secondary. It should be used not for directly learning actions, but for improving the internal model of the world or the evaluation function (critic) used in planning, particularly when the model's predictions fail to match reality. This is about learning from prediction errors.

Plausible Reasons as to Why:

Model Improvement: When reality deviates from the model's prediction, this is a signal that the model is inaccurate. RL, through experiencing this discrepancy and perhaps a resulting penalty, can provide a learning signal specifically for updating and improving the internal world model.

Learning Costs/Values: RL can still be useful for learning the "cost" or "value" associated with different states or outcomes – the "critic" part of the system that helps the agent choose which predicted future is best, even if the prediction itself comes from the model.

Prioritizing Model-Based Learning: This approach prioritizes building a predictive model of the world as the primary learning objective, using RL in a more targeted way to refine that model when necessary.
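The "adjust the world model when the prediction fails" idea can be sketched in a few lines. This is not a full RL algorithm; it only illustrates the learning signal that comes from a prediction error. The environment, its hidden gain of 2.0, the learning rate, and the tolerance are all hypothetical.

```python
def true_environment(state, action):
    """Stand-in for reality; its gain of 2.0 is unknown to the agent."""
    return state + 2.0 * action

def update_gain(estimated_gain, state, action, learning_rate=0.5, tolerance=1e-3):
    """Adjust the model only when its prediction misses the observed outcome."""
    predicted = state + estimated_gain * action
    observed = true_environment(state, action)
    error = observed - predicted
    if abs(error) <= tolerance:
        return estimated_gain  # planning worked, so there is nothing to correct
    # Gradient step on 0.5 * error**2 with respect to the gain.
    return estimated_gain + learning_rate * error * action

gain = 1.0  # the agent's initial, wrong belief about the dynamics
state = 0.0
for _ in range(20):
    gain = update_gain(gain, state, action=1.0)
    state = true_environment(state, 1.0)

print(f"estimated gain after interaction: {gain:.4f}")
```

Once the model's predictions match reality closely enough, the updates stop, which mirrors the secondary, corrective role described above.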

Yann LeCun : If you are interested in human-level AI, don’t work on LLMs

What it implies: A strong concluding statement summarizing the entire perspective. It argues that focusing solely on developing larger or more sophisticated versions of the current LLM architecture is a misguided effort if the goal is to achieve AI with capabilities comparable to human intelligence.

Plausible Reasons as to Why:

Fundamental Architectural Limits: Based on the preceding points, the argument is that current LLMs' fundamental design (auto-regressive, probabilistic next-token prediction, lack of explicit world model, lack of planning/reasoning mechanisms) makes them inherently incapable of reaching human-level understanding, planning, and robust interaction with the world. They are seen as mastering linguistic form but not the underlying reality the language describes.

Focus on Surface vs. Deep Understanding: LLMs excel at processing and generating text based on patterns learned from the surface form of language. Human intelligence, however, involves understanding the physical world, causality, intentions, and planning in a multi-modal environment, using language as just one tool among many. The critiques suggest LLMs are stuck learning surface correlations rather than deep, predictive models of reality.

Inefficient Learning: Humans (and animals) can learn incredibly quickly from limited experience and observation by building internal models of how things work. Current LLMs require colossal amounts of data and computation, which critics argue points to an inefficient learning paradigm for general intelligence.

In summary, these recommendations stem from a belief that current LLMs are primarily sophisticated text processors that lack the fundamental mechanisms for true understanding, planning, and robust interaction with the physical and social world that characterize human intelligence. The proposed alternative architectures and learning paradigms are aimed at building systems that learn predictive world models and can use them for intelligent behavior beyond just generating plausible language.