The Deductive Mirage: Financial LLMs

How Big Data Blurs Lines in the Age of LLMs – Lessons from the Financial World

We live in an era captivated by the seeming magic of Large Language Models (LLMs). These digital oracles, trained on oceans of text, can generate prose with astonishing fluency, answer complex queries, and even mimic human creativity.

They appear to reason, understand, and even deduce. But beneath this impressive façade lies a fundamental question, starkly highlighted by the very scale of these systems: are we being fooled by induction?

Are we mistaking incredibly sophisticated pattern recognition for genuine deductive reasoning, especially when the data samples become monstrously large?

To understand this critical distinction, let's start with the bedrock of logic: first principles. Imagine building a house. Deduction is like following a blueprint: you have the general rules of architecture (strong foundations, load-bearing walls, weatherproof roofing), and you apply them step-by-step to construct a specific building.

If you follow the rules correctly, the outcome – a stable house – is guaranteed, within the limits of material properties and execution. Induction, on the other hand, is like observing many houses in a rainy region. You notice that most of them have sloped roofs, so you inductively conclude that sloped roofs are good for rainy climates. You're generalizing from specific observations to a broader principle.

However, induction isn't guaranteed. You might find a flat-roofed house in the same area that also works, or perhaps your initial observation was skewed by the sample of houses you happened to see.

Now, envision LLMs as vast, incredibly complex inductive machines. They are not programmed with explicit deductive rules like architectural blueprints. Instead, they are fed colossal datasets – the internet's textual output – and learn by observing patterns.

They identify statistical relationships between words, phrases, and concepts. This is pure induction on a scale never before imagined.

And here’s where the illusion begins: when your sample is monstrously large, induction can fool you, because it becomes nearly indistinguishable from deduction.

Imagine a library so vast it contains every book ever written, every article published, every conversation transcribed. If you ask it a question, it doesn't "deduce" the answer by applying logical rules in a traditional sense.

Instead, it draws on an internal representation distilled from this colossal library, identifying patterns and relationships that correlate with your question. It then generates an answer that statistically aligns with these patterns.

To an observer, the answer might appear reasoned, logical, even deductive. But fundamentally, it is an incredibly sophisticated form of pattern matching and statistical extrapolation.
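To make "statistically aligns with these patterns" concrete, here is a deliberately tiny stand-in: a bigram model that only counts which word follows which in a toy corpus, then emits the most frequent continuation. Real LLMs are vastly larger neural networks, so treat this strictly as an illustration of pattern-driven generation, not as how any particular model works; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Repeatedly append the most frequent next word: no rules, only counts."""
    words = [start]
    while len(words) < max_words:
        options = follows.get(words[-1])
        if not options:
            break  # no observed continuation for this word
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus standing in for "the internet's textual output".
corpus = [
    "rising rates hurt growth stocks",
    "rising rates hurt tech stocks",
    "rising rates hurt growth stocks",
]
follows = train_bigrams(corpus)
print(generate(follows, "rising"))
```

The output reads like a market claim, yet the model has no notion of rates or stocks; it simply reproduced the most frequent path through its training text.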

This "deductive mirage" is particularly pertinent – and potentially perilous – in domains like finance, stock markets, and market analysis.

These are environments characterized by vast quantities of historical data, complex interdependencies, and a constant search for predictive edges.

Let's explore this through the lens of the financial world.

The Stock Market: A Playground for Inductive Illusions

The stock market is a data-rich environment par excellence. Decades of stock prices, trading volumes, economic indicators, news articles, and social sentiment data are readily available. Financial analysts and quantitative traders have long sought to find predictive patterns within this data, using statistical methods and machine learning to gain an advantage. LLMs, with their unprecedented pattern recognition capabilities, represent the latest and perhaps most potent tool in this quest.

Imagine using an LLM to analyze historical stock market data. You feed it years of stock prices, interest rate changes, inflation figures, company earnings reports, and even news headlines.

The LLM diligently sifts through this data, identifying intricate statistical correlations. It might discover, for instance, that historically, when interest rates rise sharply and consumer confidence declines, technology stocks tend to underperform the broader market.

Based on this inductive learning, the LLM might generate a seemingly "deductive" prediction: "Given the current economic climate with rising interest rates and declining consumer confidence, we expect technology stocks to underperform in the coming quarter."

This prediction sounds logical, reasoned, almost like a deductive conclusion derived from established economic principles.

But is it truly deduction? From a first-principles perspective, no. The LLM isn't applying fundamental economic laws to derive this prediction. It's extrapolating a statistical pattern observed in past data. It's saying, "In the past, when conditions similar to these existed, technology stocks tended to underperform. Therefore, it is likely that they will do so again." This is sophisticated induction, not deduction.
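Stripped to its core, that kind of "prediction" is a conditional frequency computed over past data. The sketch below uses a handful of made-up quarterly records (not real market data) to show that the confident-sounding forecast is just the share of matching historical quarters in which the outcome occurred; the record layout and function name are hypothetical.

```python
# Hypothetical quarterly records:
# (rates_rising_sharply, confidence_falling, tech_underperformed)
history = [
    (True, True, True),
    (True, True, True),
    (True, True, False),
    (True, True, True),
    (False, True, False),
    (True, False, False),
    (False, False, False),
    (True, True, True),
]


def conditional_frequency(records, rates_up: bool, confidence_down: bool) -> tuple[float, int]:
    """Share of past quarters matching the conditions in which tech underperformed."""
    matches = [r[2] for r in records if r[0] == rates_up and r[1] == confidence_down]
    if not matches:
        raise ValueError("no past quarters match these conditions")
    return sum(matches) / len(matches), len(matches)


freq, n = conditional_frequency(history, rates_up=True, confidence_down=True)
print(f"Tech underperformed in {freq:.0%} of {n} matching quarters")
```

Nothing in the calculation encodes why rates and confidence should affect tech stocks; change the history and the "conclusion" changes with it.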

Let's consider other examples in finance where this inductive illusion can be potent:

Financial Modeling: LLMs can be used to build incredibly complex financial models. Imagine training an LLM on millions of loan applications, repayment histories, and various socioeconomic data points.

The LLM could develop a highly sophisticated model for predicting credit risk. This model, appearing to "deduce" creditworthiness based on a multitude of factors, is fundamentally inductive.

It's learned to identify patterns in past loan performance data to predict future outcomes.
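A full LLM is not needed to see the inductive core of a credit model. As a minimal sketch, the code below swaps in plain logistic regression (a standard credit-scoring technique, not an LLM) trained by gradient descent on eleven made-up loan records; the features, data, learning rate, and function names are all hypothetical. Whatever "creditworthiness rule" it arrives at is simply the set of weights that best fits past repayment outcomes.

```python
import math

# Hypothetical past loans: (debt_to_income, missed_payments_last_year, defaulted)
loans = [
    (0.10, 0, 0), (0.15, 0, 0), (0.20, 1, 0), (0.25, 0, 0), (0.30, 1, 0),
    (0.35, 2, 0), (0.20, 0, 1), (0.40, 2, 1), (0.45, 1, 1), (0.50, 3, 1),
    (0.55, 2, 1),
]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(data, lr: float = 0.5, epochs: int = 5000) -> list[float]:
    """Fit [bias, w_dti, w_missed] by batch gradient descent on the log-loss."""
    w = [0.0, 0.0, 0.0]
    n = len(data)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for dti, missed, defaulted in data:
            x = (1.0, dti, float(missed))
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - defaulted
            for j in range(3):
                grad[j] += err * x[j]
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


def default_probability(w: list[float], dti: float, missed: int) -> float:
    """Predicted default probability for a new applicant under the fitted weights."""
    return sigmoid(w[0] + w[1] * dti + w[2] * missed)


weights = fit_logistic(loans)
print(f"low-risk profile:  {default_probability(weights, 0.15, 0):.2f}")
print(f"high-risk profile: {default_probability(weights, 0.50, 3):.2f}")
```

If the economic conditions that produced those past defaults change, nothing in the weights signals that the fitted relationship has stopped holding.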

Automated Market Analysis Reports: LLMs can generate market analysis reports that mimic the style and language of experienced human analysts. They can summarize vast amounts of financial news, earnings reports, and market data to produce insightful-sounding reports, complete with "deductive" reasoning and investment recommendations.

However, these reports, even if impressively coherent, are built on statistical patterns derived from existing reports and market data, not on a genuine understanding of fundamental economic principles or future market dynamics.

Algorithmic Trading Strategies: Imagine an LLM trained to develop algorithmic trading strategies. It analyzes years of market data, identifying patterns and developing algorithms that have historically been profitable.

These algorithms, appearing to "deduce" optimal trading decisions based on market conditions, are fundamentally inductive. They are exploiting statistical anomalies and patterns observed in past market behavior, hoping these patterns will persist in the future.
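The risk in "historically profitable" is easy to demonstrate. The sketch below generates returns that are pure random noise, so by construction no rule has a real edge, then searches 500 hypothetical rules (each goes long or short based on a random weighting of the last five days' returns) and keeps the one with the best backtest. It is a generic illustration of data snooping under these stated assumptions, not a model of any real trading system or LLM.

```python
import random


def rule_signals(weights: list[float], returns: list[float]) -> list[int]:
    """Long (+1) or short (-1) each day by the sign of a weighted sum of prior returns."""
    k = len(weights)
    signals = [0] * min(k, len(returns))  # flat until enough history exists
    for t in range(k, len(returns)):
        score = sum(w * returns[t - 1 - i] for i, w in enumerate(weights))
        signals.append(1 if score > 0 else -1)
    return signals


def total_return(weights: list[float], returns: list[float]) -> float:
    """Sum of daily position * return (a simple, cost-free backtest)."""
    return sum(s * r for s, r in zip(rule_signals(weights, returns), returns))


rng = random.Random(42)
# Pure noise: zero mean, 1% daily volatility, so no rule has a genuine edge.
in_sample = [rng.gauss(0.0, 0.01) for _ in range(250)]
out_of_sample = [rng.gauss(0.0, 0.01) for _ in range(250)]

# Hypothetical strategy search: 500 random 5-day lookback rules.
rules = [[rng.uniform(-1, 1) for _ in range(5)] for _ in range(500)]
best = max(rules, key=lambda w: total_return(w, in_sample))

print(f"best rule, in-sample:     {total_return(best, in_sample):+.2%}")
print(f"same rule, out-of-sample: {total_return(best, out_of_sample):+.2%}")
```

The winning rule's in-sample profit comes entirely from selecting the luckiest fit among many candidates; on fresh noise its expected return is zero, however impressive the backtest looked.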

Why Induction Can Fool Us at Scale

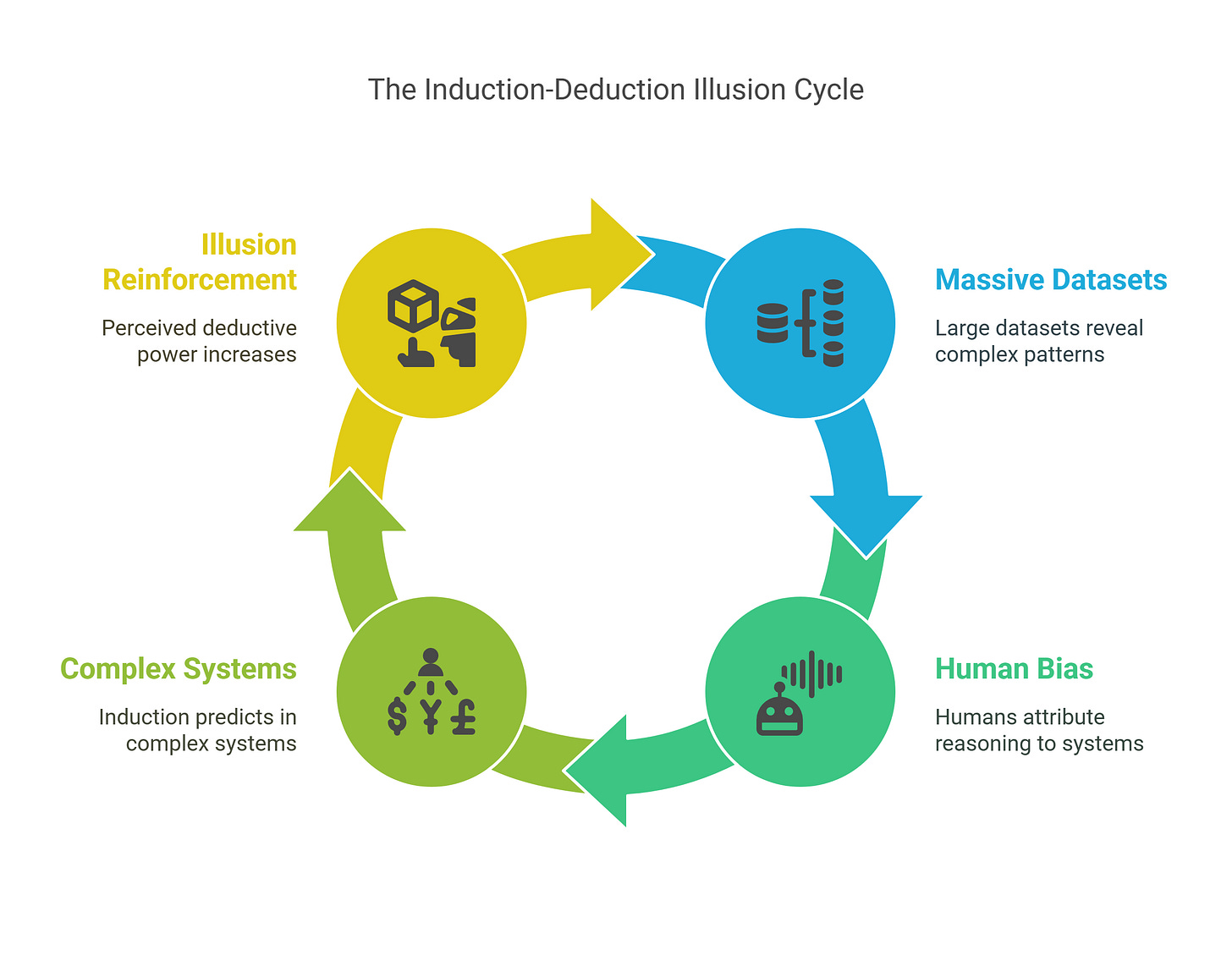

The illusion that large-scale induction can become indistinguishable from deduction stems from several key factors:

Statistical Power of Massive Datasets: The sheer volume of data LLMs are trained on allows them to identify incredibly nuanced and complex patterns that would be impossible for humans to discern or for smaller machine learning models to capture.

This depth of pattern recognition creates the impression of deeper understanding and more reliable predictions.

Human Cognitive Biases: We humans are prone to anthropomorphization. When we see a system generating coherent, reasoned-sounding outputs, we tend to attribute human-like understanding and reasoning capabilities to it. We easily fall into the trap of believing that because it sounds deductive, it is deductive.

The Nature of Complex Systems (like Financial Markets): Financial markets, and many other real-world systems, are inherently complex and not governed by simple, deterministic rules.

They are driven by a multitude of interacting factors, many of which are unpredictable or even unknowable. In such systems, inductive models can often achieve a surprisingly high degree of predictive accuracy within the observed data distribution. This further reinforces the illusion of deductive power.

The Danger of the Deductive Mirage in Finance

The allure of the deductive mirage in financial applications is potent, but also fraught with danger. Mistaking inductive pattern recognition for true deductive reasoning can lead to significant risks:

Overconfidence and False Certainty: Believing that an LLM-generated prediction is based on deductive logic can breed overconfidence. Investors might place excessive faith in these predictions, assuming a level of certainty that is not warranted.

This can lead to riskier investment decisions and amplified losses when the inductive patterns inevitably break down.

Vulnerability to "Black Swan" Events: Financial markets are notorious for "Black Swan" events – unpredictable, high-impact events that lie far outside historical data distributions. Inductive models, trained on past data, are inherently vulnerable to these events. If an LLM-based trading algorithm has learned only from historical data, it will be unprepared for a truly novel market shock. The "deductive" facade crumbles when confronted with conditions its training data never contained.
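One simple way this blind spot shows up: a model that summarizes history as a normal distribution assigns essentially zero probability to moves far outside that history. In the sketch below, the ten daily returns and the 20% shock are invented, and the normal fit is a deliberately simple stand-in for any model learned purely from past data.

```python
import math
import statistics


def normal_cdf(x: float, mean: float, stdev: float) -> float:
    """P(X <= x) for a normal distribution, via the complementary error function."""
    z = (x - mean) / stdev
    return 0.5 * math.erfc(-z / math.sqrt(2))


# Hypothetical daily returns from a calm period (roughly 1% daily moves).
history = [0.012, -0.008, 0.005, -0.011, 0.009, -0.004, 0.007, -0.010, 0.003, -0.006]
mu = statistics.mean(history)
sigma = statistics.stdev(history)

shock = -0.20  # a hypothetical 20% one-day crash, far outside the observed range
p = normal_cdf(shock, mu, sigma)
print(f"mean={mu:.4f}, stdev={sigma:.4f}, z={(shock - mu) / sigma:.1f}, P={p:.3g}")
```

The resulting probability is vanishingly small (far below 10^-100 here), which is the model's way of saying "this cannot happen"; the estimate reflects only the calm period it was shown.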

Lack of Causal Understanding: LLMs, being pattern-matching machines, do not possess genuine causal understanding. They can identify correlations – that event A often precedes event B – but they don't necessarily understand why A causes B, or whether the relationship is causal at all rather than mere correlation. In finance, understanding causal relationships is crucial for making sound long-term decisions and navigating complex market dynamics.

Relying solely on inductive pattern recognition without causal insight is akin to navigating a ship using only historical wind patterns, without understanding the fundamental principles of sailing or meteorology.
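A small simulation shows how a strong correlation can arise with no causal link at all. Two hypothetical series, A and B, are both driven by a hidden factor Z and never by each other; the factor, noise levels, and random seed are illustrative assumptions.

```python
import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(7)
n = 5_000
# Hidden common driver Z (hypothetically, a broad risk-appetite factor).
z = [rng.gauss(0.0, 1.0) for _ in range(n)]
# A and B each depend on Z plus independent noise; neither depends on the other.
a = [zi + rng.gauss(0.0, 0.5) for zi in z]
b = [zi + rng.gauss(0.0, 0.5) for zi in z]

# Subtract Z's contribution (possible here only because we built the data).
resid_a = [ai - zi for ai, zi in zip(a, z)]
resid_b = [bi - zi for bi, zi in zip(b, z)]

print(f"corr(A, B)         = {pearson(a, b):.2f}")
print(f"corr(A - Z, B - Z) = {pearson(resid_a, resid_b):.2f}")
```

A pure pattern-matcher sees only the strong A-B correlation (about 0.8 by construction). Once the common driver is removed, the leftover correlation is near zero, which is the kind of causal insight that correlation alone cannot supply.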

Analogy: The Technical Analyst's Chart and the LLM’s Library

Think of a seasoned technical analyst who meticulously studies stock charts. They identify patterns like "head and shoulders," "double tops," and "moving average crossovers" that have historically correlated with future price movements.

They develop trading strategies based on these patterns, appearing to "deduce" future price action from chart formations. However, technical analysis, at its core, is inductive. It relies on observing patterns in past price data and generalizing them to the future.
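A moving average crossover is a good example of a rule built entirely from past prices. The sketch below flags days where a short trailing average crosses a longer one; the 3-day and 5-day windows and the price series are arbitrary illustrations, not recommended settings.

```python
from typing import Optional


def simple_moving_average(prices: list[float], window: int) -> list[Optional[float]]:
    """Trailing mean over `window` prices; None until enough history exists."""
    out: list[Optional[float]] = []
    for i in range(len(prices)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(prices[i + 1 - window : i + 1]) / window)
    return out


def crossover_signals(prices: list[float], short: int = 3, long: int = 5) -> list[tuple[int, str]]:
    """Days where the short SMA crosses above ("buy") or below ("sell") the long SMA."""
    short_ma = simple_moving_average(prices, short)
    long_ma = simple_moving_average(prices, long)
    signals = []
    for i in range(1, len(prices)):
        if None in (short_ma[i - 1], long_ma[i - 1], short_ma[i], long_ma[i]):
            continue  # not enough history for both averages yet
        if short_ma[i - 1] <= long_ma[i - 1] and short_ma[i] > long_ma[i]:
            signals.append((i, "buy"))
        elif short_ma[i - 1] >= long_ma[i - 1] and short_ma[i] < long_ma[i]:
            signals.append((i, "sell"))
    return signals


prices = [10, 10, 10, 10, 10, 11, 12, 13, 12, 10, 9, 8]
print(crossover_signals(prices))
```

The rule is mechanical and fully explicit, which makes it feel deductive, yet its only justification is that such crossovers have preceded price moves in the past. That premise is the inductive step.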

An LLM in finance is like a technical analyst with access to the entire history of financial data, news, and analysis, processed at lightning speed. It can identify far more complex and subtle patterns than any human analyst could ever hope to.

But just as the technical analyst’s chart patterns are ultimately inductive generalizations, so too are the patterns discovered and exploited by LLMs. Neither guarantees future success. The market can always deviate from historical patterns.

First Principles Revisited: Induction Remains Induction

Returning to our first principles, it is crucial to remember that no matter how vast the dataset, no matter how sophisticated the model, induction remains induction. Increasing the sample size does not magically transform inductive reasoning into deductive certainty.

The line may blur, the illusion may become incredibly convincing, but the fundamental difference remains.
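The arithmetic behind this point is short. More data shrinks the uncertainty of an estimate about the past, but only under the assumption that past and future are draws from the same process. The sketch computes the usual normal-approximation 95% confidence half-width, 1.96 × sqrt(p(1 − p)/n), for a hypothetical observed frequency of 0.60 at increasing sample sizes.

```python
import math


def ci_half_width(p_hat: float, n: int, z: float = 1.96) -> float:
    """Normal-approximation confidence half-width for an estimated frequency."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


for n in (100, 10_000, 1_000_000):
    print(f"n={n:>9,}: 0.60 ± {ci_half_width(0.60, n):.4f}")
```

The interval narrows a hundredfold from 100 to 1,000,000 observations, yet none of that precision tests the assumption that tomorrow's market follows yesterday's distribution. That assumption is the inductive leap, and no sample size removes it.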

In the context of financial markets and similar complex, data-rich domains, this distinction is not merely academic. It is a critical factor in responsible AI development and deployment. We must approach LLM-generated insights, predictions, and recommendations with a healthy dose of skepticism, recognizing their inherent limitations as inductive systems. While they can be powerful tools for pattern discovery and analysis, they should not be mistaken for oracles of deductive certainty.

Conclusion: Navigating the Deductive Mirage with Eyes Wide Open

The rise of LLMs has gifted us with remarkable tools capable of processing and generating language with unprecedented sophistication. However, the very scale of their inductive learning creates a compelling illusion, making pattern matching appear deceptively like deductive reasoning.

This illusion is particularly potent in data-rich, pattern-driven fields like finance. While LLMs can uncover intricate patterns in financial data and generate seemingly deductive predictions, it is crucial to remember that these are fundamentally inductive extrapolations, not guaranteed deductive conclusions.

In the financial world, mistaking this inductive mirage for deductive reality can lead to overconfidence, vulnerability to unforeseen events, and a lack of genuine causal understanding.

The key to harnessing the power of LLMs responsibly in finance, and other complex domains, lies in recognizing their true nature: powerful inductive instruments, not deductive oracles. By maintaining a critical perspective, understanding the limitations of induction, and prioritizing human oversight and domain expertise, we can navigate the deductive mirage, leveraging the strengths of LLMs while mitigating their inherent risks. That way, these powerful tools serve as valuable aids rather than unreliable substitutes for human judgment and understanding.

Learn more about how to build LLMs that optimize for truthfulness rather than just pattern matching.

Join our courses at Ecologic Corporation, or simply join our public forums for deeper discussions.